A recently published article in Science discusses findings from a study of the Thomson Reuters Journal Impact Factor (JIF).

The study concluded that “the [JIF] citation distributions are so skewed that up to 75% of the articles in any given journal had lower citation counts than the journal’s average number.”

The impact factor, which authors and institutions have used as a measuring stick to help decide everything from tenure to the allocation of grant dollars, has come under heavy criticism in the past few years. One problem associated with impact factors, as discussed in the Science article, is how the number is calculated and how easily it can be misinterpreted.

Essentially, a journal’s impact factor for a given year is the average number of citations received that year by the articles the journal published in the previous two years. However, this average becomes skewed when a small handful of papers collect huge numbers of citations while most of the papers published receive few or none. Because of this, the study argues, the impact factor is not necessarily a reliable predictor of how often an individual article will be cited.
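To illustrate why an average can mislead when citations are this skewed, here is a minimal Python sketch. It computes a simplified JIF-style ratio (total citations divided by number of items, assuming every item counts as citable) for a hypothetical journal. The citation counts and the function names `impact_factor` and `share_below_mean` are invented for illustration; they are not data or methods from the study.

```python
from statistics import median


def impact_factor(citations_per_item):
    """Simplified JIF-style ratio: total citations to the items from the two
    prior years, divided by the number of those items (assumes all are citable)."""
    return sum(citations_per_item) / len(citations_per_item)


def share_below_mean(citations_per_item):
    """Fraction of items cited fewer times than the journal-level average."""
    avg = impact_factor(citations_per_item)
    below = sum(1 for c in citations_per_item if c < avg)
    return below / len(citations_per_item)


# Hypothetical citation counts for 20 articles (NOT data from the study):
# most are rarely cited, while two papers are cited heavily.
citations = [0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 4, 4, 5, 6, 40, 120]

print(f"mean citations (JIF-style): {impact_factor(citations):.1f}")  # 10.0
print(f"median citations: {median(citations)}")                       # 2.0
print(f"share below the mean: {share_below_mean(citations):.0%}")     # 90%
```

In this made-up example, two highly cited papers pull the mean up to 10, so 18 of the 20 articles fall below the journal’s “average,” while the median article has only 2 citations. Reporting a median alongside the mean is one way to expose this kind of skew.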

The second problem the study identifies is a lack of transparency in the calculation methods Thomson Reuters uses.

But no matter what happens with the JIF, as academic publishing expert David Smith says in the article, the real problem isn’t the JIF itself. It’s “the way we think about scholarly progress that needs work. Efforts and activity in open science can lead the way in the work.”

Learn more about ECS’s commitment to open access and the Society’s Free the Science initiative: a business-model-changing initiative that will make our research freely available to all readers, while remaining free for authors to publish.

UPDATE: Thomson Reuters announced on July 11 in a press release that the company will sell its Intellectual Property & Science business to Onex and Baring Asia for $3.55 billion. Learn more about this development.

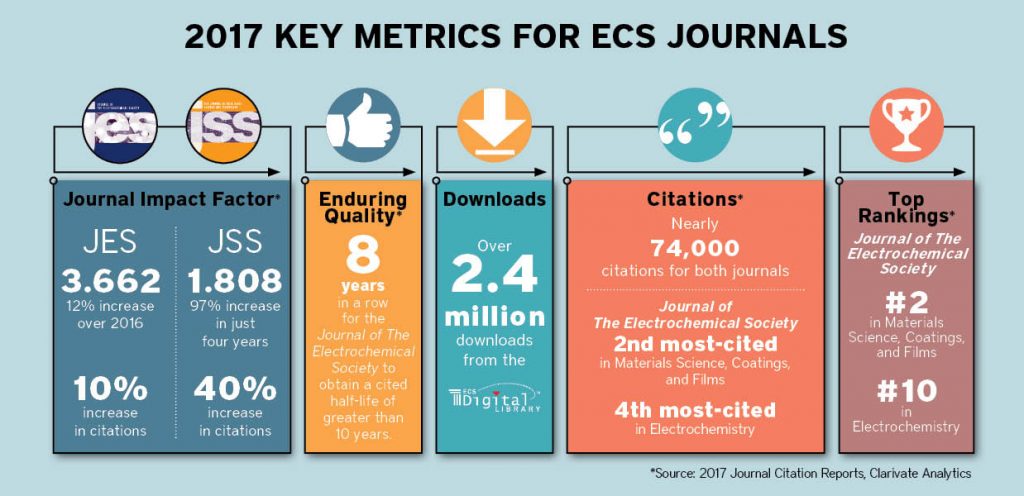
